Here’s some food for thought: for every $92 companies spend on driving traffic, they invest only about $1 in converting it.

While the exact ratio will vary by industry and over time, acquisition still tends to get the lion’s share of the budget compared with optimization and experimentation.

We're pouring money into ads, SEO and content marketing all to drive traffic, and then we just... hope our website works? Hope the design convinces? Hope people click the right buttons?

So that's why you test stuff.

Except now you face a different problem: which kind of testing? You've stumbled across A/B testing in some blog post. Then someone mentions multivariate testing in a webinar. Your colleague swears by split testing (which, confusingly, is almost the same as A/B testing).

And now you're paralyzed, wondering if you're about to waste three weeks testing the wrong thing in the wrong way.

I get it. When I first started in conversion optimization, I spent an embarrassing amount of time Googling "A/B testing vs multivariate testing" at 2 AM.

And everyone acts like the choice is obvious when it absolutely isn't, at least not until you understand what each method does and when it makes sense for your specific situation.

This article clears up that confusion, once and for all. Let’s begin.


What is A/B Testing?

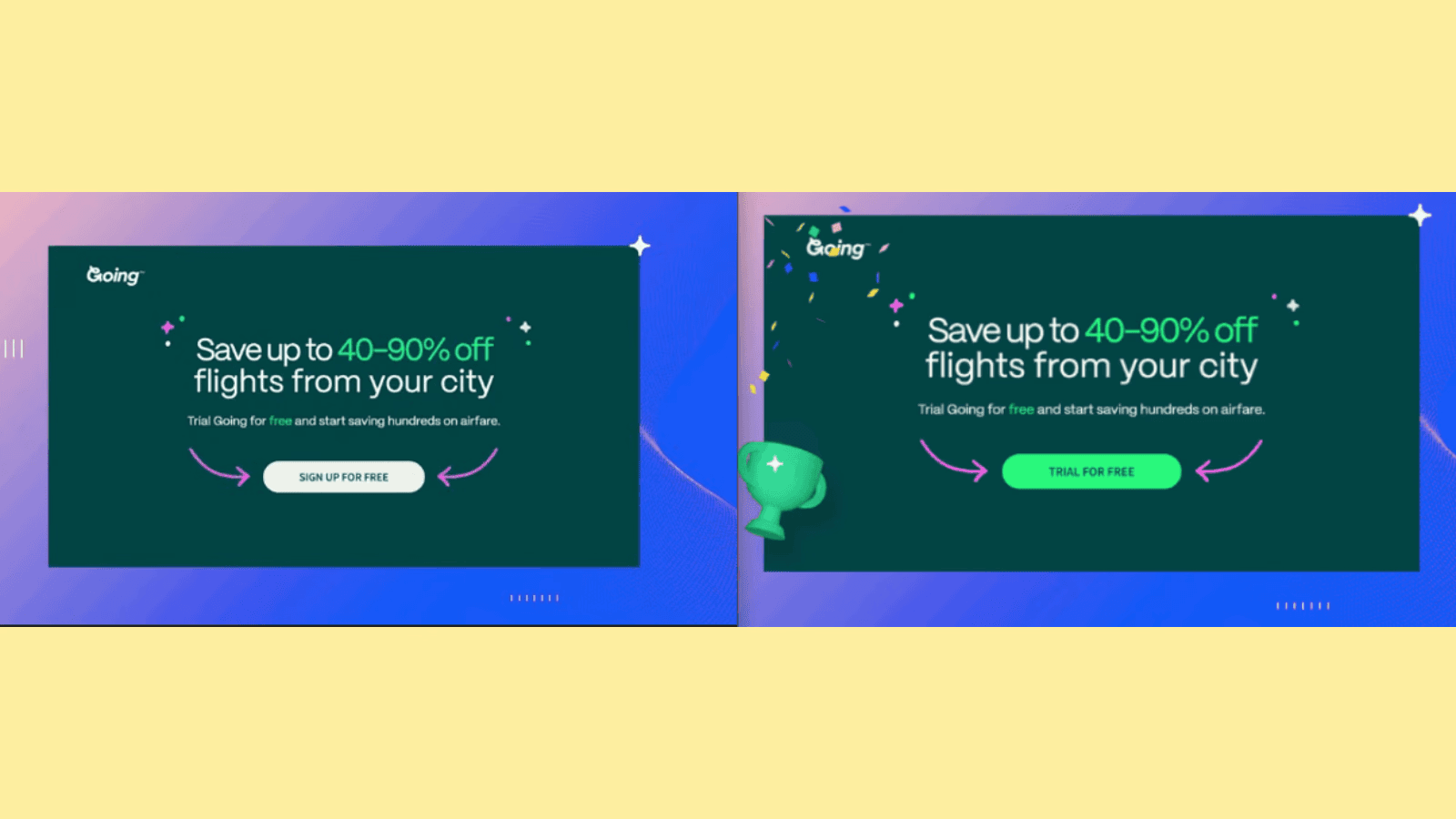

A/B testing is the practice of comparing two versions of something to determine which performs better against a specific goal. You show version A to half your audience and version B to the other half, then let the data tell you which one wins.

The beauty of A/B testing lies in its simplicity. In its cleanest form, you change exactly one variable, be it a headline, a button color, a call-to-action or a pricing structure, while keeping everything else constant. This isolation is what gives A/B testing its power. When version B outperforms version A by 23%, you can attribute that lift to the one thing you changed, because random assignment spreads every other factor evenly across both groups. That keeps confounding factors from muddying the waters.

A proper A/B test requires:

a clear hypothesis,

a meaningful sample size, and

statistical significance.

You need enough traffic to overcome random variance, and you need to run the test long enough to account for weekly patterns in user behavior. A test that runs only on weekends might give you completely different results than one that captures weekday traffic.

The methodology is straightforward:

You split traffic randomly and evenly between your control (A) and your variant (B).

Then you measure a primary metric. It could be conversion rate, click-through rate, revenue per visitor or whatever aligns with your business goal.

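Then you wait until you reach statistical significance, typically at the 95% confidence level. This means that if there were truly no difference between A and B, a gap as large as the one you observed would show up less than 5% of the time by random chance alone.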
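The significance check in that last step can be sketched in a few lines. This is a minimal sketch that assumes a standard two-sided, two-proportion z-test. That is one common choice, and testing platforms may use other methods, such as sequential or Bayesian statistics. The visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 2.7% vs 3.2% conversion on 5,000 visitors per variant.
z, p = two_proportion_z_test(conv_a=135, n_a=5_000, conv_b=160, n_b=5_000)
print(f"z = {z:.2f}, p = {p:.3f}, significant at 95%: {p < 0.05}")
```

With these made-up numbers, the half-point lift is not yet significant: p comes out well above 0.05. The same two rates measured on 50,000 visitors per variant would clear the bar. Sample size matters as much as the size of the lift.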

What makes A/B testing so valuable in practice is that it kills assumptions. Your designer might be convinced that the minimalist layout will convert better. Your copywriter might swear the clever wordplay will resonate. A/B testing doesn't care about opinions; it cares about what makes a difference with real users.

Keep in mind that A/B testing tells you what works, but not necessarily why. It's also sequential by nature; you test one thing, implement the winner, then test the next thing. This works brilliantly for optimization but can feel slow when you're trying to redesign an entire experience.

What is Multivariate Testing?

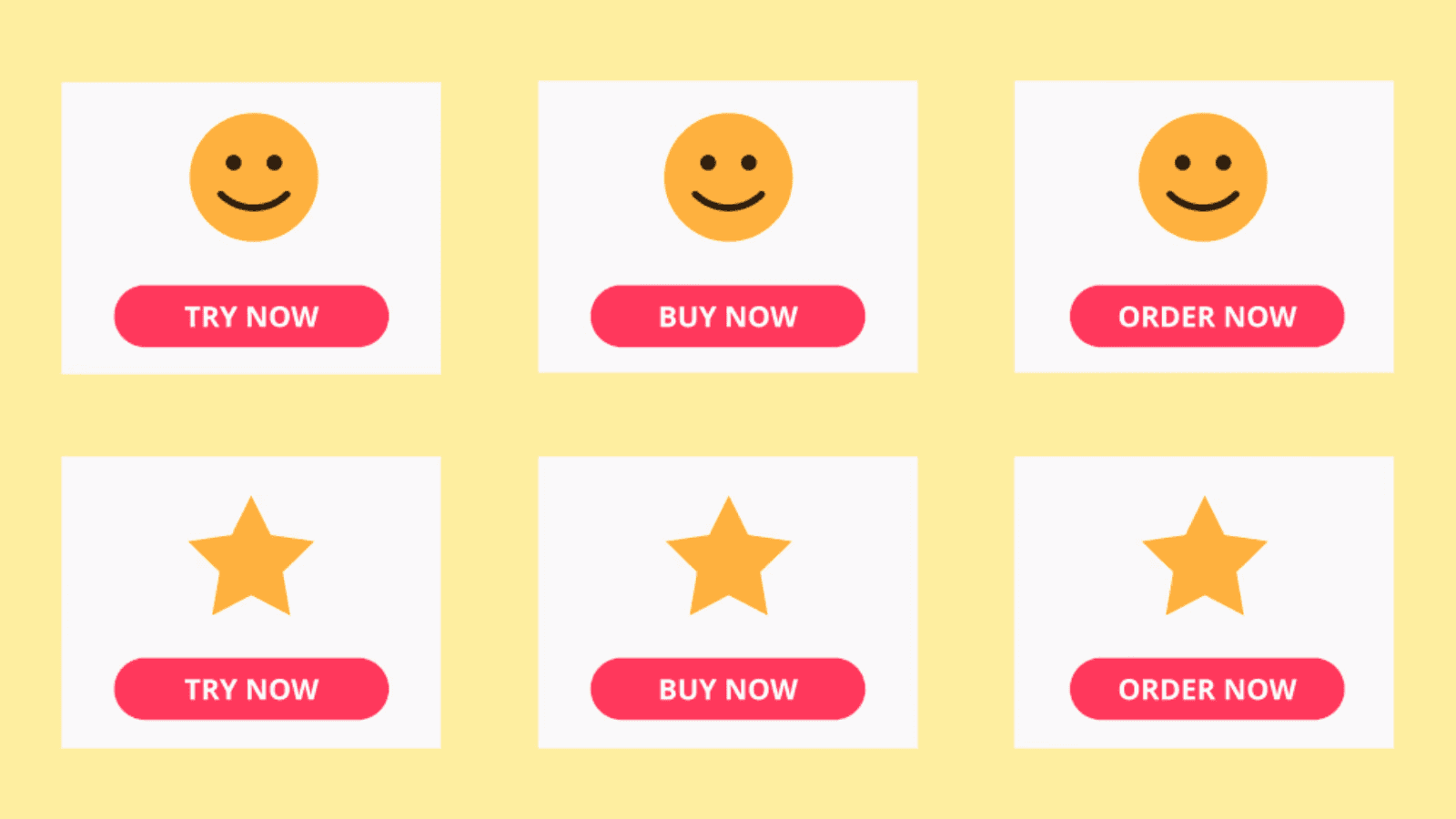

Multivariate testing takes the core concept of A/B testing and amplifies it. Multivariate tests let you test multiple variables simultaneously and understand how they interact with each other.

Instead of testing headline A versus headline B, you're testing headline A versus headline B, combined with image X versus image Y, combined with button copy 1 versus button copy 2, all at once.

The mathematics here gets complex quickly.

Imagine a page with:

3 possible headlines

2 hero images

2 button colors

That gives you 3 × 2 × 2 = 12 unique combinations.
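To see where those twelve combinations come from, you can enumerate them with Python's standard `itertools.product`, which yields every pairing of the options. The element names below are placeholders standing in for your real headlines, images, and colors.

```python
from itertools import product

# Placeholder variants for each page element (hypothetical names).
headlines = ["Headline 1", "Headline 2", "Headline 3"]
hero_images = ["Image X", "Image Y"]
button_colors = ["Green", "Orange"]

# Full factorial: every headline paired with every image and every button color.
combinations = list(product(headlines, hero_images, button_colors))

print(len(combinations))  # 12
for combo in combinations:
    print(combo)
```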

Similarly, four elements with two variants each? Sixteen combinations. This exponential growth is both the power and the peril of multivariate testing.

Multivariate tests demand a lot more traffic than A/B tests. If your site gets 100,000 visitors monthly, you can run effective A/B tests, because a two-variant test sends about 50,000 visitors to each version. To give each of sixteen combinations that same 50,000 visitors, a multivariate test would need about 800,000 monthly visitors. This is why multivariate testing is typically reserved for high-traffic pages or companies with massive user bases.

Now, there are two main approaches to multivariate testing.

Full factorial testing examines every possible combination, giving you complete interaction data but demanding enormous traffic.

Fractional factorial testing uses statistical modeling to test only a subset of combinations while still inferring the impact of each element. It's more practical but loses some precision around interactions.
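Here is a small illustration of the fractional idea. It is a sketch of a textbook half-fraction (a 2^(3-1) design), not any particular platform's method. The sketch assumes three hypothetical two-level elements, coded as -1 and +1. Only the runs where the third element equals the product of the first two are kept (the defining relation C = A × B).

```python
from itertools import product

# Three two-level elements (e.g. headline, image, button copy) coded -1 / +1.
full_factorial = list(product([-1, 1], repeat=3))  # 8 runs

# Half fraction with defining relation C = A * B: keep 4 of the 8 runs.
half_fraction = [(a, b, c) for a, b, c in full_factorial if c == a * b]

for run in half_fraction:
    print(run)
```

Each element still appears at each level twice, so its main effect remains estimable from half the combinations. The price is that the C column is identical to the A × B interaction column. You can't tell C's effect apart from that interaction, which is the lost precision described above.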

If you're trying to optimize a high-traffic landing page and you genuinely don't know which combination of elements will resonate, multivariate testing can shortcut months of sequential A/B tests. But if you're testing radically different concepts or working with limited traffic, sequential A/B testing will get you better answers faster.

The nuance that separates experts from amateurs here is knowing when each methodology fits. Use A/B testing to validate big strategic bets and iterate on individual elements. Use multivariate testing when you need to optimize the interaction between multiple elements on a page that already converts reasonably well. The goal isn't to always run the most sophisticated test—it's to run the right test for the question you're trying to answer.

The Difference Between A/B Testing and Multivariate Testing

Look, you already understand the mechanics of both methodologies. But that alone isn't enough.

A commonly cited benchmark puts the average website conversion rate at around 2.35%, which means roughly 98% of your traffic leaves without converting. Both A/B and multivariate testing are tools to chip away at that 98%, but they do it differently.

Here are the differences you need to understand:

The fundamental distinction

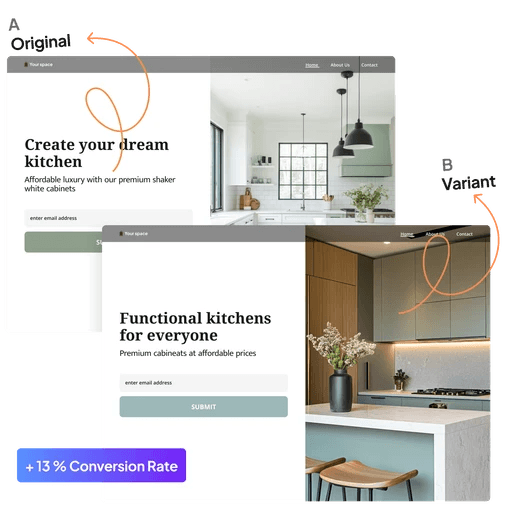

Let's start with the main distinction that shapes everything else. A/B testing is about comparing entire experiences. You're testing version A against version B, where those versions might be radically different from each other.

Multivariate testing, by contrast, is about dissecting an experience into its component parts. It's the difference between comparing two fully furnished rooms and systematically testing different combinations of furniture, lighting, and wall colors to design the perfect room from scratch.

This distinction matters a lot. When you run an A/B test and variant B wins by 15%, you know that something about that version connected with users. But you don't necessarily know what. Was it the headline? The imagery? The layout? The answer is "all of the above, working together."

Multivariate testing gives you a different kind of insight: it tells you the individual contribution of each element and, critically, how those elements interact with each other.

Differences in traffic requirements

Traffic requirements tell you a lot about when each method makes sense.

A/B testing splits your traffic between two (or maybe three or four) variations. If you're getting 10,000 visitors monthly and you split them evenly between two variants, each version sees 5,000 visitors.

That's usually enough to reach statistical significance within a reasonable timeframe, especially if you're testing something that meaningfully impacts conversion.

Multivariate testing has a much more aggressive appetite for traffic. Remember that exponential growth we talked about earlier? Every additional variable you test multiplies the number of combinations:

Testing 3 elements with 2 variants each = 8 unique combinations

Testing 4 elements with 2 variants each = 16 combinations

Testing 5 elements with 3 variants each = 243 combinations

Your 10,000 monthly visitors now split into groups of roughly 1,250 each for that first scenario. Testing four elements with two variants each? Sixteen combinations. Now each combination sees only 625 visitors monthly, and your test needs to run for months to achieve statistical significance. That also assumes your conversion rate is healthy enough to generate meaningful data from those sample sizes.
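The arithmetic above can be reproduced with a short sketch. It assumes traffic is split evenly across combinations and that every element has the same number of variants. Both are simplifications, and the 10,000-visitor figure is just the example from the text.

```python
def visitors_per_combination(monthly_visitors: int, elements: int,
                             variants_per_element: int) -> tuple[int, float]:
    """Return (number of combinations, visitors each combination gets per month)."""
    combos = variants_per_element ** elements  # e.g. 2 variants ** 3 elements = 8
    return combos, monthly_visitors / combos


for elements, variants in [(3, 2), (4, 2), (5, 3)]:
    combos, per_combo = visitors_per_combination(10_000, elements, variants)
    print(f"{elements} elements x {variants} variants: {combos} combinations, "
          f"~{per_combo:,.0f} visitors each per month")
```

In the 243-combination case, each combination gets only about 41 visitors a month. At typical conversion rates, that is a handful of conversions at best.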

Multivariate testing is a high-traffic game. We recommend having at least 10,000 visitors per month before considering multivariate testing, and that's really the bare minimum.

If you're working with a site that gets 50,000 or 100,000 monthly visitors, multivariate testing becomes viable. Below that threshold, you're better off running sequential A/B tests.

When to use each method

But traffic volume isn't the only consideration. Complexity matters too. A/B testing excels when you're making big bets: use it when you want to redesign your entire checkout flow or when you're thinking of moving from a multi-step form to a single-page form.

Multivariate testing shines when you're optimizing a page that already converts reasonably well and you want to squeeze additional performance from it.

You know the overall structure works, but you're uncertain about the optimal combination of elements within that structure. Should the headline emphasize speed or reliability? Should the CTA be green or orange? Should testimonials appear above or below the fold?

Multivariate testing can answer all these questions simultaneously and reveal interaction effects that sequential A/B tests would likely miss.

Difference in analysis complexity

When an A/B test concludes, interpretation is straightforward. Variant B converted at 3.2% versus variant A's 2.7%, with 95% statistical confidence. You implement the winner and move on. The data is clean, the decision is clear, and your entire team can understand what happened.

Multivariate test results require more sophisticated analysis. You're trying to understand the relative impact of each element and how they interact. This typically involves statistical techniques like ANOVA (analysis of variance) to determine which factors matter most.

Let me give you a concrete example of when each approach makes sense. Suppose you're optimizing an e-commerce product page that's getting 100,000 visitors monthly and converting at 2%. You've been tasked with improving that conversion rate.

The A/B Testing Scenario

If you have a hypothesis that adding video product demonstrations would impact conversion, that's an A/B test. You create one variant with video and one without, and split your traffic evenly. Within a few weeks (sooner if the effect is large), you have a clear answer. The test is decisive, the implementation is straightforward, and you quickly learn whether video makes any difference.

The Multivariate Testing Scenario

But let's say you've already validated that video helps, and now you want to optimize the page further. You're debating between four different headline approaches, three different button colors, and two different layouts for customer reviews.

You could run three sequential A/B tests. That would take months and assumes that these elements don't interact with each other. Or you could run a single multivariate test that examines all these elements simultaneously. With your high traffic volume, you can test all 24 combinations (4 × 3 × 2). You'd discover not just which individual elements work best, but which combinations work best together.

Time investment differences

A/B tests typically run for one to four weeks, depending on your traffic and conversion rates. You can launch a test on Monday and have actionable results by month's end. This speed makes A/B testing perfect for iterative optimization. You test something, implement the winner, and move on to the next hypothesis.

Multivariate tests demand patience. Depending on your traffic, the number of variables, and the subtlety of the changes, a multivariate test might need to run for several months to reach statistical significance across all combinations.

If you're testing subtle variations (say, three different headline phrasings that are all fairly similar), you need even more data to detect differences in performance. The longer timeline means you're making a bigger upfront commitment, and you can't pivot as quickly if market conditions change.

Finally, here’s a quick comparison of both:

| | A/B Testing | Multivariate Testing |
|---|---|---|
| What you're testing | Entire page versions or single elements in isolation | Multiple elements simultaneously to find optimal combinations |
| Number of variations | 2-4 variations typically | 8-100+ variations depending on elements tested |
| Traffic requirements | Low to moderate (1,000-10,000+ monthly visitors) | High (10,000+ monthly visitors minimum, preferably 50,000+) |
| Time to results | 1-4 weeks typically | Several weeks to months |
| Best used for | Major design changes, strategic decisions, validating big bets | Fine-tuning high-traffic pages, understanding element interactions |
| Complexity | Simple to set up and analyze | Complex setup and statistical analysis required |
| What you learn | Which overall version performs better | Which elements matter most and how they interact with each other |
| Interpretation | Straightforward: a direct comparison between versions | Requires statistical expertise (ANOVA, interaction analysis) |
| Goal | Find the better overall experience quickly | Discover the best-performing combination of elements |
| Ideal for | Sites with limited traffic, testing radical changes, quick iteration | High-traffic sites, optimization of proven pages, systematic knowledge building |
| Example use case | Testing completely redesigned checkout flow vs. original | Testing 3 headlines × 2 images × 2 button colors on high-traffic landing page |

A/B Testing Vs Multivariate Testing: Choosing the Right Tool for the Job

Use A/B testing to validate big strategic bets, test completely different designs, and quickly iterate when traffic is limited.

Use multivariate testing to fine-tune high-traffic pages, understand element interactions, and build systematic knowledge about what drives conversion.

Also remember that resource requirements extend beyond just traffic. A/B testing is relatively simple to set up and manage. Most teams can handle it with minimal specialized expertise.

Multivariate testing requires more statistical knowledge, more robust testing infrastructure, and more careful planning to ensure you're testing variables that actually matter. If you're just getting started with optimization, A/B testing is the right choice. Once you've built your optimization muscle and your site traffic supports it, multivariate testing becomes a powerful addition to your capabilities.

A/B Testing Process vs. Multivariate Testing Process

While both methodologies share some common ground (they're both about making data-driven decisions, after all), the processes diverge in meaningful ways that affect how you plan, execute, and analyze your tests.

The A/B Testing Process

Step 1: Identify the problem and form a hypothesis

Start by pinpointing what's not working. Your checkout abandonment rate is 68%? Your email click-through rate is abysmal? Your landing page converts at 1.2% when the industry average is 2.5%? That's your problem.

Now form a specific, testable hypothesis, such as: "Adding trust badges above the checkout button will reduce abandonment by reassuring first-time customers about payment security."

Step 2: Define your success metric

Decide what you're measuring before you start. This sounds obvious, but you'd be surprised how many tests launch without a clear primary metric.

Pick one primary metric, like conversion rate or time-on-page, that aligns with your goal. You can track secondary metrics too, but you need one north star to guide your decision.

Step 3: Create your variant

Build version B based on your hypothesis. Keep the changes meaningful but focused. If you're testing trust badges, add trust badges. Don't also change the headline, button color, and page layout. You want to know what caused the difference in performance.

Step 4: Determine sample size and test duration

Use a sample size calculator to determine how many visitors each variant needs to reach statistical significance.

Factor in your current conversion rate, your expected improvement, and your desired confidence level (typically 95%). This tells you how long your test needs to run given your traffic volume.

Step 5: Split traffic and launch

Use your testing platform to randomly split traffic 50/50 between the control and variant.

Make sure the split is truly random. Do not send mobile users to one version and desktop users to another unless that's specifically what you're testing.
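One common way to get a split that is random yet stable for returning visitors is to hash a visitor ID into a bucket. The sketch below assumes you have a persistent user or cookie ID. The function and experiment names are hypothetical, and your testing platform likely handles this for you. Because the device type never enters the calculation, mobile and desktop users end up spread across both versions.

```python
import hashlib


def assign_variant(user_id: str, experiment_id: str, split: float = 0.5) -> str:
    """Deterministically assign a visitor to 'A' (control) or 'B' (variant)."""
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    # Map the first 32 bits of the hash to [0, 1). This behaves like a uniform
    # random draw, but the same visitor always lands in the same bucket.
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "checkout-trust-badges")] += 1
print(counts)  # close to 5,000 each
```

Including the experiment ID in the hash means a visitor's bucket in one test doesn't determine their bucket in the next.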

Step 6: Monitor (but don't touch)

Resist the urge to peek at results every hour and declare a winner. Let the test run its full course. Check periodically to ensure your testing platform is working correctly and traffic is splitting as expected, but don't stop the test early just because one variant is winning.
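Why early stopping is risky can be shown with a small simulation. It runs hypothetical A/A tests, where both versions share the same true 5% conversion rate, so any "winner" is a false positive. The code checks a two-proportion z-test after every 500 visitors per arm. It then compares two rules: "stop at the first significant look" and "look once at the planned end". All parameters are illustrative assumptions.

```python
import math
import random


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        return 1.0  # no variation at all, so no evidence of a difference
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa_tests(experiments: int = 200, looks: int = 10,
                      visitors_per_look: int = 500, rate: float = 0.05,
                      seed: int = 42) -> tuple[float, float]:
    """Return (false-positive rate when peeking, rate with a single final look)."""
    rng = random.Random(seed)
    peeking_fp = single_look_fp = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        declared_winner = False
        for _ in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            n += visitors_per_look
            if p_value(conv_a, n, conv_b, n) < 0.05:
                declared_winner = True  # a peeker would have stopped here
        peeking_fp += declared_winner
        # The disciplined tester looks only once, at the planned sample size.
        single_look_fp += p_value(conv_a, n, conv_b, n) < 0.05
    return peeking_fp / experiments, single_look_fp / experiments


peek_rate, single_rate = simulate_aa_tests()
print(f"false winners with peeking: {peek_rate:.0%}, with one look: {single_rate:.0%}")
```

With ten looks, the stop-early rule typically flags several times more false winners than the single planned look, which stays near the nominal 5%.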

Step 7: Analyze results

Once you've reached statistical significance, analyze the data. Did variant B win? By how much? Look at your secondary metrics too. Sometimes a variant increases clicks but decreases actual conversions. Context matters.

Step 8: Implement and document

Roll out the winner to all traffic. Document what you tested, what you learned, and why it worked. This builds institutional knowledge for future tests.

The Multivariate Testing Process

Step 1: Select the page and elements to test

Choose a high-traffic page that already converts reasonably well. You're optimizing, not fixing. Identify 3-5 specific elements you want to test.

Elements commonly worth testing include:

Headline (the message and value proposition)

Hero image or video (visual communication)

CTA button (text, color, size, placement)

Form length and fields

Social proof placement and type

Trust indicators and security badges

Be strategic here, because each additional element multiplies the number of combinations, so your test complexity grows exponentially.

Step 2: Create variants for each element

For each element, create 2-3 distinct variants. If you're testing headlines, don't create five versions that are 90% identical. Make them meaningfully different so you can detect performance differences.

Step 3: Calculate traffic requirements

This is important. If you're testing 3 elements with 3 variants each, you have 27 combinations. Each combination needs enough traffic to reach statistical significance.

Use a multivariate testing calculator to determine if you have enough traffic. If you don't, reduce the number of elements or variants you're testing.
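A back-of-the-envelope version of that feasibility check is sketched below. It assumes a full factorial design, where every combination needs the same number of visitors. The per-combination requirement, which you'd get from a sample size calculator, and the traffic figures are hypothetical. Fractional designs or main-effect analyses can need less.

```python
import math


def months_to_complete(combinations: int, visitors_needed_per_combination: int,
                       monthly_visitors: int) -> float:
    """How many months of traffic a full factorial test would consume."""
    return combinations * visitors_needed_per_combination / monthly_visitors


def max_combinations(visitors_needed_per_combination: int, monthly_visitors: int,
                     max_months: float) -> int:
    """Largest number of combinations you can afford within a time budget."""
    return math.floor(monthly_visitors * max_months / visitors_needed_per_combination)


# Hypothetical: 3 elements x 3 variants, 5,000 visitors needed per combination.
print(months_to_complete(27, 5_000, 50_000))  # about 2.7 months
print(max_combinations(5_000, 50_000, max_months=2))  # 20 combinations fit in 2 months
```

If the time budget is two months, you'd trim the design, for example to 3 × 3 × 2 = 18 combinations, rather than stretch the test.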

Step 4: Choose your testing approach

Decide between full factorial testing (testing every combination) or fractional factorial testing (testing a subset and using statistical modeling to infer the rest).

Full factorial gives you complete data but needs massive traffic. Fractional factorial is more practical but requires more sophisticated analysis.

Step 5: Set up your test matrix

Configure your testing platform to serve all combinations randomly. This is more complex than A/B testing setup and usually requires more technical expertise.

Make sure your analytics can track which combination each visitor sees.
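To make that concrete, here is a sketch of serving and logging combinations. It assumes hash-based assignment with a separate hash per element, so each element is randomized independently and every combination is roughly equally likely. The element names, experiment ID, and event fields are all hypothetical. Adapt them to whatever your analytics tool expects.

```python
import hashlib
import json

# Hypothetical elements and their variants (3 x 2 x 2 = 12 combinations).
ELEMENTS = {
    "headline": ["speed", "reliability", "price"],
    "cta_color": ["green", "orange"],
    "testimonials": ["above_fold", "below_fold"],
}


def _bucket(user_id: str, experiment_id: str, element: str, n: int) -> int:
    """Stable pseudo-random index in [0, n) for one element."""
    key = f"{experiment_id}:{element}:{user_id}".encode("utf-8")
    return int(hashlib.sha256(key).hexdigest()[:8], 16) % n


def assign_combination(user_id: str, experiment_id: str) -> dict[str, str]:
    # Hash each element separately so the elements are randomized independently.
    return {element: variants[_bucket(user_id, experiment_id, element, len(variants))]
            for element, variants in ELEMENTS.items()}


def exposure_event(user_id: str, experiment_id: str) -> str:
    """JSON payload recording exactly which combination this visitor saw."""
    combo = assign_combination(user_id, experiment_id)
    combination_id = "|".join(f"{k}={v}" for k, v in combo.items())
    return json.dumps({"user_id": user_id, "experiment_id": experiment_id,
                       "combination_id": combination_id, **combo})


print(exposure_event("user-42", "landing-page-mvt"))
```

Logging a single `combination_id` alongside the individual element values lets you analyze results per combination and per element later.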

Step 6: Launch and monitor

Deploy your test and monitor for technical issues. With so many combinations running simultaneously, there's more that can go wrong. Check that all variants are serving correctly and tracking properly.

Step 7: Let it run (longer)

Multivariate tests need more time than A/B tests. We're talking weeks to months, not days to weeks. You need enough data across all combinations to reach statistical significance. Patience is essential.

Step 8: Perform statistical analysis

Do not just look for a winning combination; you're analyzing which elements had the most impact and how they interacted.

Use ANOVA or similar statistical techniques to determine:

Which elements had statistically significant impacts on conversion

How much each element contributed to the outcome

Whether there were interaction effects between elements

Which combinations performed best and worst
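The core quantities behind that analysis can be illustrated on a simple 2 × 2 test. The results below are made up for illustration. The sketch computes point estimates only: each element's main effect, meaning the average difference across levels of the other element, and their interaction, meaning the difference of differences. Deciding whether these effects are statistically significant still requires ANOVA or a regression model with an interaction term.

```python
# Hypothetical 2 x 2 results: (headline, testimonials) -> (conversions, visitors).
results = {
    ("feature", "no_testimonials"): (200, 10_000),
    ("feature", "testimonials"): (260, 10_000),
    ("benefit", "no_testimonials"): (240, 10_000),
    ("benefit", "testimonials"): (230, 10_000),
}
rate = {cell: conv / n for cell, (conv, n) in results.items()}


def main_effect(rates: dict, factor_index: int, level_high: str, level_low: str) -> float:
    """Average conversion rate at one level minus the average at the other."""
    high = [r for cell, r in rates.items() if cell[factor_index] == level_high]
    low = [r for cell, r in rates.items() if cell[factor_index] == level_low]
    return sum(high) / len(high) - sum(low) / len(low)


headline_effect = main_effect(rate, 0, "benefit", "feature")
testimonial_effect = main_effect(rate, 1, "testimonials", "no_testimonials")

# Interaction: does the testimonial effect depend on which headline is shown?
interaction = ((rate[("benefit", "testimonials")] - rate[("benefit", "no_testimonials")])
               - (rate[("feature", "testimonials")] - rate[("feature", "no_testimonials")]))

print(f"headline: {headline_effect:+.4f}, testimonials: {testimonial_effect:+.4f}, "
      f"interaction: {interaction:+.4f}")
```

In this invented data, testimonials help on average. But the large negative interaction shows they help with feature-focused headlines and slightly hurt with benefit-focused ones. That is the kind of pattern sequential A/B tests tend to miss.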

Step 9: Implement and extract principles

Roll out the winning combination. More importantly, extract the underlying principles.

If action-oriented headlines outperformed descriptive headlines across most combinations, that's a principle you can apply elsewhere without testing.

When to Conduct A/B Testing

A/B testing is fast, it's flexible, and it works with almost any traffic level. But knowing when to deploy it and how to execute it properly makes the difference between tests that drive improvement and tests that waste time learning nothing.

Use A/B testing when:

You're testing major changes

Any time you're considering a significant design overhaul, A/B testing is your friend. Thinking about switching from a multi-step form to a single-page form? That's an A/B test. Considering a complete homepage redesign? A/B test it. These big changes warrant testing the entire experience rather than dissecting individual elements.

You have a clear hypothesis

A/B testing works best when you have a specific hypothesis you want to validate. "Adding video testimonials will increase trust and improve conversion" is a perfect A/B test hypothesis. It's specific, it's actionable, and it can be clearly validated or invalidated.

Your traffic is limited

If your page gets fewer than 10,000 visitors monthly, A/B testing is likely your only viable option. You simply don't have the traffic volume to split across dozens of multivariate combinations and get meaningful results in a reasonable timeframe.

You need results quickly

When you're under pressure to improve conversion rates fast, A/B testing delivers. Most A/B tests reach statistical significance within 2-4 weeks, sometimes faster with high traffic and strong conversion lifts.

You're testing completely different concepts

When your variants are radically different, with different value propositions, different visual approaches, and different user flows, A/B testing is the right choice. You're comparing apples to oranges, and you want to know which fruit your users prefer.

When to Conduct Multivariate Testing for Web Pages

Multivariate testing is not the right choice for every situation, but when the conditions align, it can deliver insights that A/B testing simply cannot match.

Use multivariate testing when

Your page already converts well

Multivariate testing is for optimization. If your landing page converts at 0.5% and the industry standard is 3%, you don't need multivariate testing; you need a redesign. But if you're converting at 2.8% and want to hit 3.5%, multivariate testing can find those marginal gains.

You have significant traffic

You need at least 10,000 monthly visitors at an absolute minimum, and 50,000+ is better.

Remember, each combination in your test needs enough traffic to reach statistical significance. With low traffic, you'll wait months for results or, worse, implement changes based on statistically meaningless data.

You're uncertain about element interactions

If you suspect that certain elements perform differently depending on what other elements are present, multivariate testing reveals these interactions.

Maybe your aggressive CTA works great with social proof but poorly with feature lists. You'd likely miss this interaction running sequential A/B tests.

You want to optimize an important, high-traffic page

Your homepage, your primary landing page, your checkout page—these are candidates for multivariate testing if they get enough traffic. The ROI of optimization is highest on pages where small improvements affect many visitors.

You're building a design system

Multivariate testing helps you understand which design principles work best together. The insights can inform your entire design system, making it more than just a one-page optimization exercise.

A/B Testing vs Multivariate Testing: Pros and Cons

Every testing methodology comes with tradeoffs. There's no perfect approach that works in every situation, only the right tool for the job at hand. Understanding the strengths and limitations of each method helps you choose wisely and set realistic expectations for what you'll learn.

Pros of A/B Testing

Simple to understand and execute

A/B testing is beautifully straightforward. You're comparing version A to version B. Your entire team can understand what you're testing and why, from your CEO to your intern. This simplicity means faster buy-in, easier implementation, and fewer opportunities for mistakes.

Works with limited traffic

You don't need millions of visitors to run effective A/B tests. Even sites with a few thousand monthly visitors can get meaningful results, especially when testing changes that have substantial impact on conversion. This accessibility makes A/B testing viable for small businesses, startups, and individual pages that don't get massive traffic.

Fast results

Most A/B tests reach statistical significance within 1-4 weeks. When you're under pressure to improve metrics quickly or you're working in a fast-moving market, this speed is invaluable. You can run a test, implement the winner, and move on to the next optimization within a month.

Lower technical requirements

Setting up an A/B test doesn't require a statistics PhD. Most modern testing platforms make it point-and-click simple. Your marketing team can set up and run tests without constantly pulling in data scientists or engineers.

Cons of A/B Testing

Doesn't tell you element-level insights

When variant B wins, you know the overall package performed better, but you don't know which specific elements drove that performance. If your winning variant had a different headline, hero image, and CTA, which one actually mattered? A/B testing can't tell you.

Misses interaction effects

Elements on a page don't exist in isolation; they interact with each other. An aggressive headline might work great with one visual style but terribly with another. A/B testing is blind to these interactions. You might optimize each element sequentially through A/B tests and still end up with a suboptimal combination.

Risk of local maximum

When you test one element at a time, you might optimize toward a local maximum rather than finding the global optimum. You improve your headline, then your image, then your CTA, but the optimal combination might involve different choices that work better together even if they don't win individually.

Pros of Multivariate Testing

Reveals element-level impact

This is the superpower of multivariate testing. You don't just learn which combination won; you learn how much each individual element contributed to that win. You might discover, for example, that headlines account for 60% of the performance variance while button color accounts for 5%. That insight tells you where to focus future optimization efforts.

Uncovers interaction effects

Multivariate testing shows you how elements work together. You might discover that testimonials boost conversion when paired with feature-focused headlines but decrease conversion when paired with benefit-focused headlines. These interaction effects are invisible to A/B testing but can be critical to optimization success.

More efficient than sequential A/B tests

Instead of running five sequential A/B tests over three months, you run one multivariate test that examines all five elements simultaneously. Yes, the multivariate test takes longer than a single A/B test, but given enough traffic, it's often much faster than running those A/B tests one after another.

Finds the global optimum

Because you're testing elements in combination, you're more likely to find the truly optimal design rather than settling for a local maximum. You're not constrained by the path you took with sequential testing. Instead, you're exploring the entire solution space simultaneously.

Cons of Multivariate Testing

Requires massive traffic

This is the killer limitation. You need at least 10,000 monthly visitors minimum, and realistically 50,000+ for most multivariate tests to be viable. Each combination needs enough traffic to reach statistical significance, and that requirement scales exponentially with complexity. Most websites simply don't have enough traffic to run multivariate tests effectively.

Takes much longer to complete

Where A/B tests might conclude in 2-4 weeks, multivariate tests often run for 2-4 months or longer. This extended timeline means you're making a bigger upfront commitment, and you can't pivot quickly if market conditions change or if you realize mid-test that you're testing the wrong things.

Complex to set up and analyze

Multivariate testing isn't point-and-click simple. Setting up the test matrix, ensuring proper tracking across all combinations, and performing the statistical analysis requires more technical sophistication. You often need data scientists or experienced analysts to properly interpret results.

The honest assessment

A/B testing is the reliable workhorse that works for almost everyone in almost every situation. It's not the most sophisticated tool, but it's the most practical one. You can start running A/B tests tomorrow with minimal resources and start learning immediately.

Multivariate testing is the specialist tool that delivers superior insights when conditions are right. Those conditions (high traffic, pages that already convert well, specific optimization goals, statistical expertise) don't exist for most websites most of the time. But when they do exist, multivariate testing delivers insights that A/B testing cannot match.

Fibr AI Puts A/B and Multivariate Testing on AutoPilot

Once you start treating A/B and multivariate testing as a habit rather than a one-off project, the bottleneck usually shifts from ideas to execution. Someone has to set up variants, wire up targeting, check the data, and keep tests running.

That is where a platform like Fibr AI fits in.

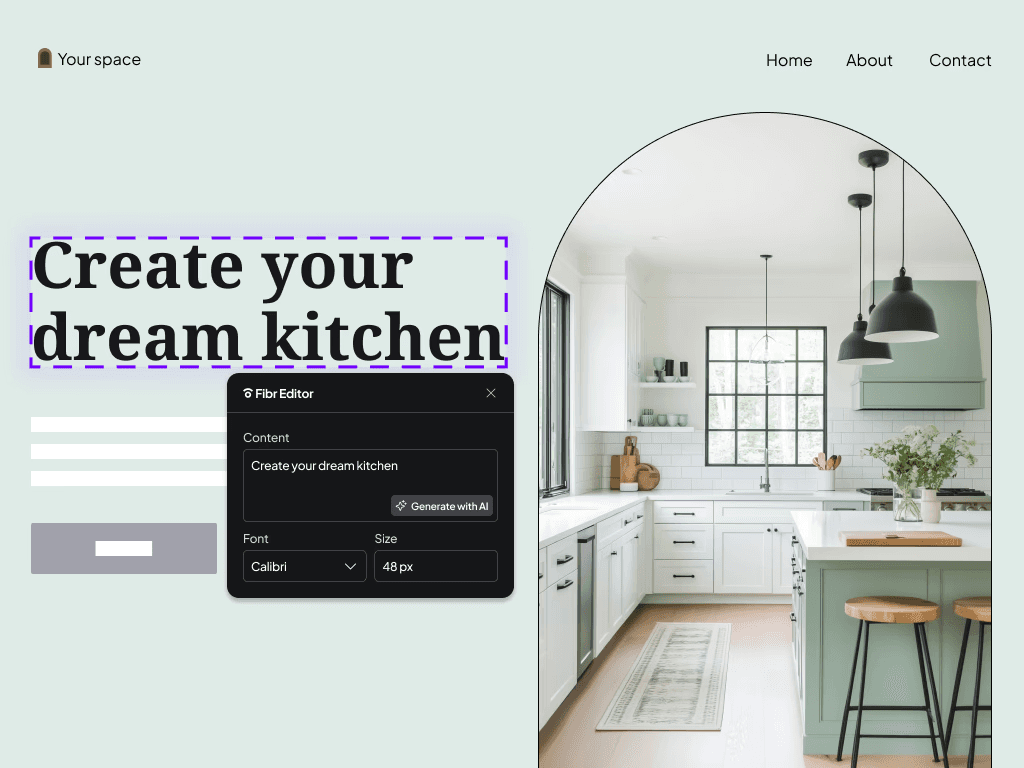

Fibr AI sits on top of your site as an AI-powered CRO layer that runs tests, personalizes content, and monitors performance in the background, so your team is not babysitting every experiment.

Fibr gives you both the mechanics of testing and the intelligence on top of it.

At the core, you get a lifetime free A/B testing platform where you can create, run, and analyze tests on any webpage. That includes a visual WYSIWYG editor for changing headlines, CTAs, and UI elements without touching code, plus AI suggestions for copy variations.

On top of simple A/B tests, Fibr allows you to work with multiple variants per element and target different audiences. That brings you close to multivariate-style experimentation without forcing you into an overly complex stats workflow. You can set up several variations of a section, aim them at specific segments, and let the AI allocate traffic and learn.

Max, the AI testing agent, is designed to reduce the manual grind. It can help generate hypotheses, build variants, and launch tests in minutes with no developers or spreadsheets in the loop, which matters if your team is small but your traffic is meaningful.

Here are more Fibr features for A/B and multivariate workflows:

Lifetime free A/B testing: Run unlimited A/B tests on any webpage on a forever free plan, which lowers the barrier for getting an experimentation culture off the ground.

No-code visual editor: A WYSIWYG editor that lets marketers and product folks change headlines, images, CTAs, and layouts directly, instead of waiting in the dev queue.

AI-powered variant creation and ideas: AI suggestions for copy and layout variations, plus Max as an AI testing agent to propose hypotheses and create experiments quickly.

Real-time personalization for anonymous visitors: AI agent Liv adapts content on the fly based on interaction patterns, visit count, location, and language, so the same page can behave differently for different segments without separate builds.

Always-on monitoring and performance protection: AI agent Aya keeps an eye on uptime, threats, and performance issues, with alerts that let you fix problems before they quietly drag down conversion rates.

Deep analytics and GA4 integration: Experiments and personalization campaigns plug into GA4, so you can see attributed revenue and test impact alongside your existing analytics, instead of yet another silo.

If you are ready to move from occasional tests to always-on experimentation, try setting up your next A/B or multivariate-style test in Fibr.

Start your 30-day free trial and experience all these features hands-on.


FAQs

What's the main difference between A/B testing and multivariate testing?

A/B testing compares two or more complete versions of a page to see which performs better overall.

Multivariate testing examines multiple individual elements simultaneously to find the optimal combination and understand how elements interact with each other. You can picture A/B testing as comparing two finished outfits, while multivariate testing is mixing and matching individual pieces to find the best combination.

How long should I run an A/B test?

Run your A/B test for at least one full week, preferably two, even if you reach statistical significance earlier. User behavior varies by day of week, and you need to capture that full pattern. Most A/B tests reach statistical significance within 1-4 weeks. Never stop a test after just a few days, no matter how promising the results look.

Can I run multiple A/B tests on the same page simultaneously?

Technically yes, but it's generally not recommended unless you have massive traffic. Running multiple tests simultaneously can create interaction effects that muddy your results. You won't know if the performance change came from test A, test B, or the interaction between them. If you want to test multiple elements simultaneously, that's exactly what multivariate testing is designed for.

What's statistical significance and why does it matter?

Statistical significance (typically at 95% confidence) means that if there were no real difference between your variants, results as extreme as yours would occur less than 5% of the time by random chance. It doesn't guarantee the winner is better, but without it, you're essentially making decisions based on noise.

What if my A/B test shows no significant difference?

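It means the change you tested didn't produce an effect large enough for your test to detect. Either the change genuinely doesn't matter much to users, or its effect is smaller than your sample size could pick up. Don't keep running the test hoping for different results. Document the finding and move on to test something else. Inconclusive tests tell you where not to focus your optimization efforts.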

Can I use A/B testing for mobile apps?

Absolutely. The same principles apply whether you're testing websites, mobile apps, emails, or any digital experience. Most modern testing platforms support mobile app testing. Just ensure you're testing one platform at a time unless you have enough traffic to split between web and mobile.